VI. Deploy a highly available kube-apiserver cluster

1. Create the kubernetes-master certificate and private key

[root@k8s-master1 work]# cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes-master",

"hosts": [

"127.0.0.1",

"192.168.66.62",

"192.168.66.63",

"192.168.66.64",

"10.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"kubernetes.default.svc.cluster.local."

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "dqz"

}

]

}

EOF

- Generate the certificate and private key

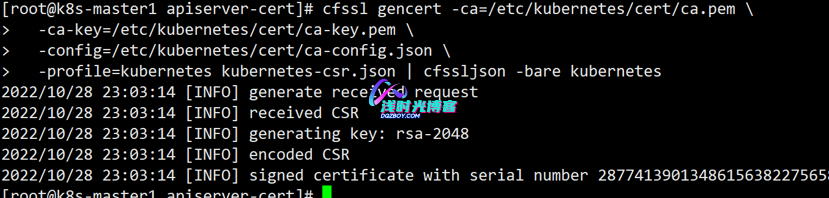

[root@k8s-master1 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

[root@k8s-master1 work]# ls kubernetes*pem

kubernetes-key.pem kubernetes.pem

- Copy the generated certificate and private key to the cluster's master nodes
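The distribution loops in this section iterate over `MASTER_IPS`, and the unit template later expands `ETCD_ENDPOINTS`, but neither variable is defined in this part. The following is only a sketch of how they might be set. The IPs come from the CSR host list above; the helper name `etcd_endpoints` and the assumption that etcd runs on the masters on port 2379 are mine, not the article's.

```shell
# Hypothetical environment for this section; adjust every value to your cluster.
# In bash the article's loops expect an array, for example:
#   MASTER_IPS=(192.168.66.62 192.168.66.63 192.168.66.64)

# Build a comma-separated etcd endpoint list from a list of IPs.
# ASSUMPTION: etcd runs on the masters and listens on client port 2379.
etcd_endpoints() (
  out=""
  for ip in "$@"; do
    # Prefix a comma only when the list already has an entry.
    out="${out:+${out},}https://${ip}:2379"
  done
  printf '%s\n' "$out"
)

ETCD_ENDPOINTS=$(etcd_endpoints 192.168.66.62 192.168.66.63 192.168.66.64)
echo "${ETCD_ENDPOINTS}"
```

With a bash array in place, the same helper can be called as `etcd_endpoints "${MASTER_IPS[@]}"`.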

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert"

scp kubernetes*.pem root@${node_ip}:/etc/kubernetes/cert/

done

2. Create the encryption configuration file

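- The Kubernetes server must be version 1.13 or later

- Important: in a highly available setup (two or more control plane nodes), the encryption configuration file must be identical on every node. Otherwise, kube-apiserver cannot decrypt the data stored in etcd.

The kube-apiserver flag --encryption-provider-config controls how API data is encrypted in etcd.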
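The configuration file below expands `${ENCRYPTION_KEY}`, which this part does not define. A minimal sketch of one common way to produce such a key, assuming it has not already been exported by an earlier setup script: generate 32 random bytes and base64-encode them. The variable `key_len` is only an illustrative sanity check.

```shell
# Generate a random 32-byte key and base64-encode it for the aescbc provider.
# ASSUMPTION: ENCRYPTION_KEY was not already set by an earlier environment script.
ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

# Sanity check: the decoded key should be exactly 32 bytes long.
key_len=$(printf '%s' "${ENCRYPTION_KEY}" | base64 -d | wc -c | tr -d ' ')
echo "decoded key length: ${key_len} bytes"
```

Generate the key once and reuse the same value on every master, since all control plane nodes must share an identical file.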

[root@k8s-master1 work]# cat > encryption-config.yaml <<EOF

kind: EncryptionConfiguration
apiVersion: apiserver.config.k8s.io/v1
resources:
  - resources:
      - secrets
      - configmaps
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}

EOF

- Copy the encryption configuration file to the /etc/kubernetes directory on the master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp encryption-config.yaml root@${node_ip}:/etc/kubernetes/

done

3. Create the audit policy file

[root@k8s-master1 work]# cat > audit-policy.yaml <<EOF

apiVersion: audit.k8s.io/v1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

EOF

- Distribute the audit policy file to all master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp audit-policy.yaml root@${node_ip}:/etc/kubernetes/audit-policy.yaml

done

4. Create the certificate used later to access metrics-server

- proxy-client-csr.json is the JSON-format certificate signing request (CSR) used to generate the Kubernetes proxy client certificate

[root@k8s-master1 work]# cat > proxy-client-csr.json <<EOF

{

"CN": "aggregator",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "dqz"

}

]

}

EOF

4.2: Generate the certificate and private key

[root@k8s-master1 work]# cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \
  -ca-key=/etc/kubernetes/cert/ca-key.pem \
  -config=/etc/kubernetes/cert/ca-config.json \
  -profile=kubernetes proxy-client-csr.json | cfssljson -bare proxy-client

[root@k8s-master1 work]# ls proxy-client*.pem

proxy-client-key.pem proxy-client.pem

4.3: Copy the generated certificate and private key to the master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp proxy-client*.pem root@${node_ip}:/etc/kubernetes/cert/

done

5. Create and distribute the kube-apiserver systemd unit file for each node

5.1: Create the kube-apiserver systemd unit template file

[root@k8s-master1 work]# cat > kube-apiserver.service.template <<EOF

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=${K8S_DIR}/kube-apiserver
ExecStart=/opt/k8s/bin/kube-apiserver \\
  --advertise-address=##MASTER_IP## \\
  --default-not-ready-toleration-seconds=360 \\
  --default-unreachable-toleration-seconds=360 \\
  --max-mutating-requests-inflight=2000 \\
  --max-requests-inflight=4000 \\
  --default-watch-cache-size=200 \\
  --delete-collection-workers=2 \\
  --encryption-provider-config=/etc/kubernetes/encryption-config.yaml \\
  --etcd-cafile=/etc/kubernetes/cert/ca.pem \\
  --etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \\
  --etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \\
  --etcd-servers=${ETCD_ENDPOINTS} \\
  --bind-address=##MASTER_IP## \\
  --secure-port=6443 \\
  --tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \\
  --audit-log-maxage=15 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-truncate-enabled \\
  --audit-log-path=${K8S_DIR}/kube-apiserver/audit.log \\
  --audit-policy-file=/etc/kubernetes/audit-policy.yaml \\
  --profiling \\
  --anonymous-auth=false \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --enable-bootstrap-token-auth \\
  --requestheader-allowed-names="aggregator" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --service-account-key-file=/etc/kubernetes/cert/ca.pem \\
  --service-account-issuer=kubernetes.default.svc \\
  --service-account-signing-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --authorization-mode=Node,RBAC \\
  --runtime-config=api/all=true \\
  --enable-admission-plugins=NodeRestriction \\
  --allow-privileged=true \\
  --apiserver-count=3 \\
  --event-ttl=168h \\
  --kubelet-certificate-authority=/etc/kubernetes/cert/ca.pem \\
  --kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \\
  --kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \\
  --kubelet-timeout=10s \\
  --proxy-client-cert-file=/etc/kubernetes/cert/proxy-client.pem \\
  --proxy-client-key-file=/etc/kubernetes/cert/proxy-client-key.pem \\
  --service-cluster-ip-range=${SERVICE_CIDR} \\
  --service-node-port-range=${NODE_PORT_RANGE} \\
  --enable-aggregator-routing=true \\
  --v=4
Restart=on-failure
RestartSec=10
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

EOF

5.2: Generate a per-node systemd unit file from the template

[root@k8s-master1 work]# for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##MASTER_IP##/${MASTER_IPS[i]}/" kube-apiserver.service.template > kube-apiserver-${MASTER_IPS[i]}.service

done

[root@k8s-master1 work]# ls kube-apiserver*.service

kube-apiserver-192.168.66.62.service kube-apiserver-192.168.66.64.service

kube-apiserver-192.168.66.63.service
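Before distributing the rendered files, it may be worth confirming that `sed` replaced every `##...##` placeholder. The helper below is a sketch under that assumption; the function name `check_rendered` is hypothetical and not part of the original procedure.

```shell
# Print the names of rendered unit files that still contain a ##...## placeholder.
# Exit status 0 means every file is clean; 1 means at least one placeholder remains.
check_rendered() (
  status=0
  for f in "$@"; do
    if grep -q '##[A-Z_]*##' "$f"; then
      echo "unrendered placeholder in: $f"
      status=1
    fi
  done
  exit "$status"
)
```

A possible invocation from the work directory is `check_rendered kube-apiserver-*.service`; any file it names should be regenerated before it is copied to a master node.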

5.3: Distribute the generated systemd unit files to all master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-apiserver-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-apiserver.service

done

6. Start the kube-apiserver service

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-apiserver"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver"

done

7. Check the kube-apiserver running status

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-apiserver |grep 'Active:'"

done

8. Check the cluster status

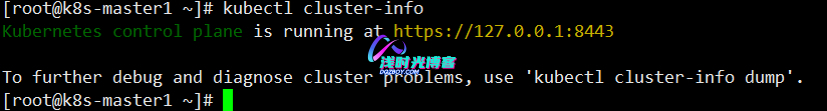

[root@k8s-master1 ~]# kubectl cluster-info

VII. Deploy a highly available kube-controller-manager cluster

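The cluster consists of three nodes. After startup, a leader is chosen through competitive election and the other nodes stay blocked on standby. When the leader becomes unavailable, the standby nodes hold a new election to produce a new leader, which keeps the service available.

Source: 浅时光博客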

1. Create the kube-controller-manager certificate and private key

1.1: Create the certificate signing request

[root@k8s-master1 ~]# cd /opt/k8s/work

[root@k8s-master1 work]# cat > kube-controller-manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.66.62",

"192.168.66.63",

"192.168.66.64"

],

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:kube-controller-manager",

"OU": "dqz"

}

]

}

EOF

1.2: Generate the certificate and private key

[root@k8s-master1 ~]# cd /opt/k8s/work

[root@k8s-master1 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

1.3: Distribute the generated certificate and private key to all master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager*.pem root@${node_ip}:/etc/kubernetes/cert/

done

2. Create and distribute the kubeconfig file

2.1: Create the kubeconfig file

[root@k8s-master1 ~]# cd /opt/k8s/work

[root@k8s-master1 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server="${KUBE_APISERVER}" \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8s-master1 work]# kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8s-master1 work]# kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8s-master1 work]# kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

2.2: Distribute the kubeconfig to all master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager.kubeconfig root@${node_ip}:/etc/kubernetes/kube-controller-manager.kubeconfig

done

3. Create the kube-controller-manager systemd unit template file

[root@k8s-master1 work]# cat > kube-controller-manager.service.template <<EOF

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-controller-manager
ExecStart=/opt/k8s/bin/kube-controller-manager \\
  --profiling \\
  --cluster-name=kubernetes \\
  --controllers=*,bootstrapsigner,tokencleaner \\
  --kube-api-qps=1000 \\
  --kube-api-burst=2000 \\
  --leader-elect=true \\
  --use-service-account-credentials=true \\
  --concurrent-service-syncs=2 \\
  --tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \\
  --authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-allowed-names="aggregator" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --authorization-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \\
  --cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --cluster-signing-duration=876000h \\
  --horizontal-pod-autoscaler-sync-period=10s \\
  --concurrent-deployment-syncs=10 \\
  --concurrent-gc-syncs=30 \\
  --node-cidr-mask-size=24 \\
  --service-cluster-ip-range=${SERVICE_CIDR} \\
  --pod-eviction-timeout=6m \\
  --terminated-pod-gc-threshold=10000 \\
  --root-ca-file=/etc/kubernetes/cert/ca.pem \\
  --service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --v=4
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

EOF

Create and distribute the kube-controller-manager systemd unit file for each master node

[root@k8s-master1 work]# for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${MASTER_IPS[i]}/" kube-controller-manager.service.template > kube-controller-manager-${MASTER_IPS[i]}.service

done

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-controller-manager.service

done

4. Start the kube-controller-manager service

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-controller-manager"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"

done

5. Check the service running status

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-controller-manager|grep Active"

done

6. View the exported metrics

curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://192.168.66.62:10257/metrics | head

7. Check the cluster status

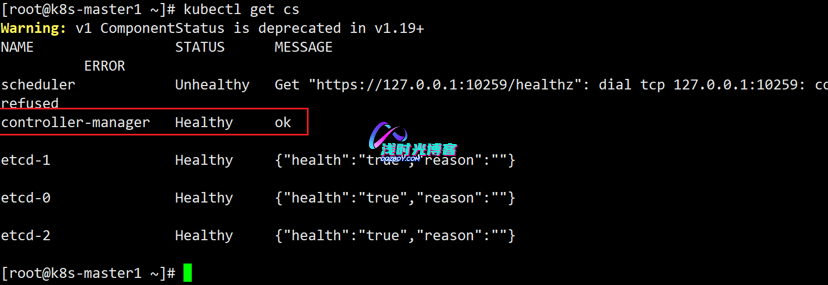

[root@k8s-master1 work]# kubectl get cs

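8. Test the high availability of the kube-controller-manager cluster

Set the log level to --v=4, then stop the kube-controller-manager service on one or two nodes and watch the logs on the remaining nodes to see whether one of them takes over as leader.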
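Besides reading the logs, the current leader can usually be read from the leader-election Lease object. The following is a sketch under stated assumptions: the component uses Lease-based election (the default in recent Kubernetes releases), the Lease lives in `kube-system` under the component's name, and the holder identity has the form `<hostname>_<uuid>`. The helper names `leader_host` and `current_leader` are hypothetical.

```shell
# Extract the host name from a leader-election holderIdentity string.
# ASSUMPTION: the identity has the form "<hostname>_<uuid>".
leader_host() {
  printf '%s\n' "${1%%_*}"
}

# Hypothetical helper: read the current holder of a component's Lease.
# Requires a working kubectl context; $1 is the Lease name,
# for example kube-controller-manager or kube-scheduler.
current_leader() {
  kubectl -n kube-system get lease "$1" -o jsonpath='{.spec.holderIdentity}'
}
```

Running `leader_host "$(current_leader kube-controller-manager)"` before and after stopping the service on the leader node should show the host name change once another node wins the election.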

VIII. Deploy a highly available kube-scheduler cluster

1. Create the kube-scheduler certificate and private key

1.1: Create the certificate signing request

[root@k8s-master1 work]# cat > kube-scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.66.62",

"192.168.66.63",

"192.168.66.64"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:kube-scheduler",

"OU": "dqz"

}

]

}

EOF

1.2: Generate the certificate and private key

[root@k8s-master1 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

[root@k8s-master1 work]# ls kube-scheduler*pem

kube-scheduler-key.pem kube-scheduler.pem

1.3: Distribute the generated certificate and private key to all master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler*.pem root@${node_ip}:/etc/kubernetes/cert/

done

2. Create and distribute the kubeconfig file

2.1: Create the kubeconfig file

[root@k8s-master1 ~]# cd /opt/k8s/work

[root@k8s-master1 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server="${KUBE_APISERVER}" \
  --kubeconfig=kube-scheduler.kubeconfig

[root@k8s-master1 work]# kubectl config set-credentials system:kube-scheduler \
  --client-certificate=kube-scheduler.pem \
  --client-key=kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig

[root@k8s-master1 work]# kubectl config set-context system:kube-scheduler \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=kube-scheduler.kubeconfig

[root@k8s-master1 work]# kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

2.2: Distribute the kubeconfig to all master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler.kubeconfig root@${node_ip}:/etc/kubernetes/kube-scheduler.kubeconfig

done

3. Create the kube-scheduler configuration file

3.1: Create the kube-scheduler configuration file

[root@k8s-master1 ~]# cd /opt/k8s/work

[root@k8s-master1 work]# cat >kube-scheduler.yaml <<EOF

apiVersion: kubescheduler.config.k8s.io/v1beta2
kind: KubeSchedulerConfiguration
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-scheduler.kubeconfig"
  qps: 100
enableContentionProfiling: false
enableProfiling: true
healthzBindAddress: ""
leaderElection:
  leaderElect: true
metricsBindAddress: ""

EOF

3.2: Distribute the kube-scheduler configuration file to all master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler.yaml root@${node_ip}:/etc/kubernetes/

done

4. Create the kube-scheduler systemd unit template file

[root@k8s-master1 ~]# cd /opt/k8s/work

[root@k8s-master1 work]# cat > kube-scheduler.service.template <<EOF

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-scheduler
ExecStart=/opt/k8s/bin/kube-scheduler \\
  --config=/etc/kubernetes/kube-scheduler.yaml \\
  --bind-address=0.0.0.0 \\
  --leader-elect=true \\
  --tls-cert-file=/etc/kubernetes/cert/kube-scheduler.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kube-scheduler-key.pem \\
  --authentication-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-allowed-names="aggregator" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --authorization-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --v=4
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target

EOF

5. Create and distribute the kube-scheduler systemd unit file for each node

[root@k8s-master1 work]# for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${MASTER_IPS[i]}/" kube-scheduler.service.template > kube-scheduler-${MASTER_IPS[i]}.service

done

[root@k8s-master1 work]# ls kube-scheduler*.service

- Distribute the systemd unit files to all master nodes

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-scheduler.service

done

6. Start the kube-scheduler service

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-scheduler"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-scheduler && systemctl restart kube-scheduler"

done

7. Check the service running status

[root@k8s-master1 work]# for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-scheduler|grep Active"

done

8. Check the cluster status

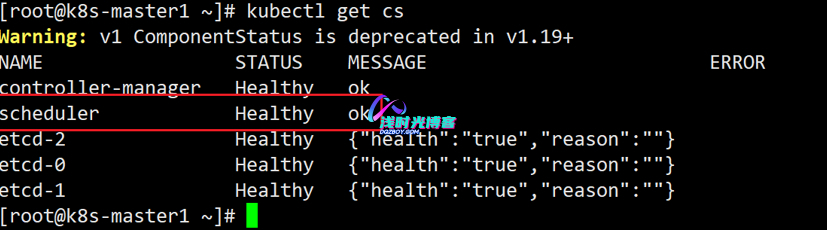

[root@k8s-master1 work]# kubectl get cs

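9. Test the high availability of the kube-scheduler cluster

Set the log level to --v=4, then stop the kube-scheduler service on one or two nodes and watch the logs on the remaining nodes to see whether one of them takes over as leader.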
