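IX. Deploy containerd

To deploy containerd, please refer to the earlier article on this blog listed below; the deployment steps are not repeated here.

X. Deploy the kubelet component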

1. Create the kubelet bootstrap kubeconfig files

Source: https://dqzboy.com

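Bootstrapping: automatically issues certificates to Node machines, that is, the certificates that kubelet uses. The master nodes of a K8s cluster are usually fixed, whereas worker nodes get added, removed, or recovered after failures, and each of these operations needs certificates. Because kubelet certificates are bound to the hostname, managing them by hand would be very tedious.

1.1: Create the files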

[root@k8s-master1 ~]# cd /opt/k8s/work

[root@k8s-master1 work]# for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

# 创建 token

export BOOTSTRAP_TOKEN=$(kubeadm token create \

--description kubelet-bootstrap-token \

--groups system:bootstrappers:${node_name} \

--kubeconfig ~/.kube/config)

# 设置集群参数

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# 设置客户端认证参数

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# 设置上下文参数

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# 设置默认上下文

kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

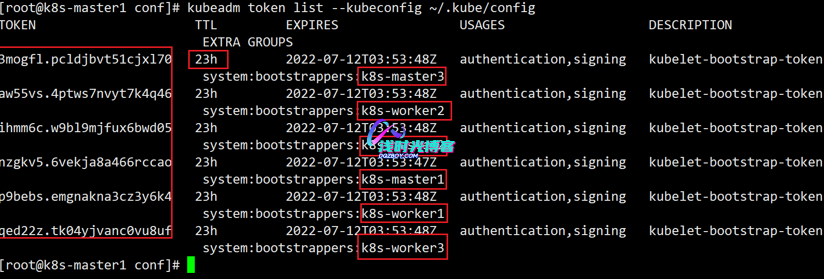

done1.2:查看 kubeadm 为各节点创建的 token

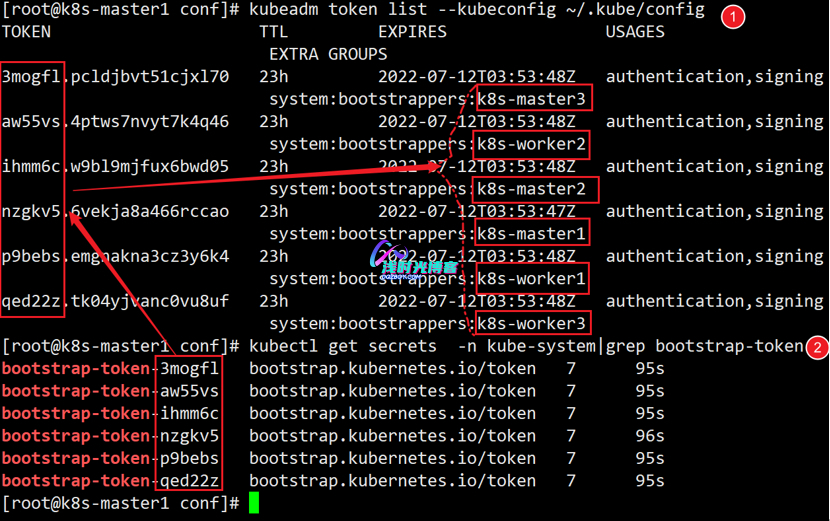

[root@k8s-master1 work]# kubeadm token list --kubeconfig ~/.kube/config

1.3: View the Secret associated with each token

[root@k8s-master1 work]# kubectl get secrets -n kube-system|grep bootstrap-token
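Each token printed by `kubeadm token list` has the form `<token-id>.<token-secret>` (6 and 16 characters from `[a-z0-9]`) and is stored in `kube-system` as a Secret named `bootstrap-token-<token-id>`, which is what the grep above matches. Tokens created without `--ttl` expire after 24 hours by default, so the kubelets should complete their bootstrap (step 8) within that window. A minimal sketch that maps a token to its Secret name; `token_secret_name` is a hypothetical helper, not part of the original procedure:

```shell
# token_secret_name TOKEN
# Hypothetical helper: validates the <token-id>.<token-secret> format and
# prints the name of the kube-system Secret that stores the token.
token_secret_name() {
  local token="$1"
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "not a bootstrap token: $token" >&2
    return 1
  fi
  # The part before the dot is the public token ID
  printf 'bootstrap-token-%s\n' "${token%%.*}"
}
```

For a hypothetical token `abcdef.0123456789abcdef`, the helper prints `bootstrap-token-abcdef`.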

2. Distribute the bootstrap kubeconfig files to all nodes

[root@k8s-master1 work]# for node_name in ${NODE_NAMES[@]}
do
  echo ">>> ${node_name}"
  scp kubelet-bootstrap-${node_name}.kubeconfig root@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig
done

3. Create and distribute the kubelet parameter configuration file

3.1: Create the kubelet configuration template

[root@k8s-master1 work]# cat > kubelet-config.yaml.template <<EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: "##NODE_IP##"
staticPodPath: ""
syncFrequency: 1m
fileCheckFrequency: 20s
httpCheckFrequency: 20s
staticPodURL: ""
port: 10250
readOnlyPort: 0
rotateCertificates: true
serverTLSBootstrap: true
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/etc/kubernetes/cert/ca.pem"
authorization:
  mode: Webhook
registryPullQPS: 0
registryBurst: 20
eventRecordQPS: 0
eventBurst: 20
enableDebuggingHandlers: true
enableContentionProfiling: true
healthzPort: 10248
healthzBindAddress: "##NODE_IP##"
clusterDomain: "${CLUSTER_DNS_DOMAIN}"
clusterDNS:
  - "${CLUSTER_DNS_SVC_IP}"
nodeStatusUpdateFrequency: 10s
nodeStatusReportFrequency: 1m
imageMinimumGCAge: 2m
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
volumeStatsAggPeriod: 1m
kubeletCgroups: ""
systemCgroups: ""
cgroupRoot: ""
cgroupsPerQOS: true
# Must match containerd's cgroup driver and the --cgroup-driver flag in the unit file
cgroupDriver: systemd
runtimeRequestTimeout: 10m
hairpinMode: promiscuous-bridge
maxPods: 220
podCIDR: "${CLUSTER_CIDR}"
podPidsLimit: -1
resolvConf: /etc/resolv.conf
maxOpenFiles: 1000000
kubeAPIQPS: 1000
kubeAPIBurst: 2000
serializeImagePulls: false
evictionHard:
  memory.available: "100Mi"
  nodefs.available: "10%"
  nodefs.inodesFree: "5%"
  imagefs.available: "15%"
evictionSoft: {}
enableControllerAttachDetach: true
failSwapOn: true
containerLogMaxSize: 20Mi
containerLogMaxFiles: 10
systemReserved: {}
kubeReserved: {}
systemReservedCgroup: ""
kubeReservedCgroup: ""
enforceNodeAllocatable: ["pods"]
EOF
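kubelet and containerd must use the same cgroup driver. In containerd's CRI configuration (commonly `/etc/containerd/config.toml`), the runc option `SystemdCgroup = true` selects the systemd driver; this sketch treats an absent or false value as cgroupfs, which matches the containerd 1.x default. Note also that a `--cgroup-driver` flag on the kubelet command line, like the one in the unit template in step 4.1, overrides the value in this file. A minimal consistency check, assuming both files are available locally; `check_cgroup_driver` is a hypothetical helper that reads only the config file, not the kubelet flags:

```shell
# check_cgroup_driver KUBELET_CONFIG CONTAINERD_CONFIG
# Hypothetical helper: prints the shared driver, or fails on a mismatch.
check_cgroup_driver() {
  local kubelet_driver containerd_driver
  # Top-level "cgroupDriver: <value>" in the KubeletConfiguration (quotes stripped)
  kubelet_driver=$(awk -F': *' '$1 == "cgroupDriver" { gsub(/"/, "", $2); print $2; exit }' "$1")
  # SystemdCgroup = true means systemd; absent or false is treated as cgroupfs
  if grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$2"; then
    containerd_driver=systemd
  else
    containerd_driver=cgroupfs
  fi
  if [ "$kubelet_driver" != "$containerd_driver" ]; then
    echo "mismatch: kubelet=${kubelet_driver:-unset} containerd=$containerd_driver" >&2
    return 1
  fi
  echo "$kubelet_driver"
}
```

On a node this could be run as `check_cgroup_driver /etc/kubernetes/kubelet-config.yaml /etc/containerd/config.toml` after the configuration file has been distributed.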

3.2: Create and distribute the kubelet configuration file for each node

[root@k8s-master1 work]# for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  sed -e "s/##NODE_IP##/${node_ip}/" kubelet-config.yaml.template > kubelet-config-${node_ip}.yaml.template
  scp kubelet-config-${node_ip}.yaml.template root@${node_ip}:/etc/kubernetes/kubelet-config.yaml
done

4. Create and distribute the kubelet systemd unit file
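Steps 3.2 and 4.2 render per-node files by replacing the `##NODE_IP##` and `##NODE_NAME##` placeholders with sed. If a placeholder is misspelled, sed silently leaves it in place and the broken file is still copied to the node. A sketch of a stricter render step, assuming values contain no `|` or `&` (true for IPs and hostnames); `render_template` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# render_template TEMPLATE OUTPUT KEY=VALUE...
# Hypothetical helper: replaces every ##KEY## in TEMPLATE with VALUE, writes
# OUTPUT, and fails if any ##UPPER_CASE## placeholder is left behind.
render_template() {
  local template="$1" output="$2" kv
  shift 2
  # Without at least one KEY=VALUE, sed would treat TEMPLATE as its script
  if [ "$#" -eq 0 ]; then
    echo "usage: render_template TEMPLATE OUTPUT KEY=VALUE..." >&2
    return 1
  fi
  local sed_args=()
  for kv in "$@"; do
    # "|" is the s||| delimiter and "&" is special in the replacement
    sed_args+=(-e "s|##${kv%%=*}##|${kv#*=}|g")
  done
  sed "${sed_args[@]}" "$template" > "$output" || return 1
  if grep -q '##[A-Z_]*##' "$output"; then
    echo "unreplaced placeholder in $output" >&2
    return 1
  fi
}
```

Inside the 3.2 loop this would be called as `render_template kubelet-config.yaml.template kubelet-config-${node_ip}.yaml.template NODE_IP=${node_ip}`, with the scp only running if it succeeds.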

4.1: Create the kubelet systemd unit template

[root@k8s-master1 work]# cat > kubelet.service.template <<EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
WorkingDirectory=${K8S_DIR}/kubelet
ExecStart=/opt/k8s/bin/kubelet \\
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \\
  --cert-dir=/etc/kubernetes/cert \\
  --container-runtime-endpoint=unix:///run/containerd/containerd.sock \\
  --root-dir=${K8S_DIR}/kubelet \\
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\
  --config=/etc/kubernetes/kubelet-config.yaml \\
  --hostname-override=##NODE_NAME## \\
  --authentication-token-webhook=true \\
  --authorization-mode=Webhook \\
  --cgroup-driver=systemd \\
  --v=2
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
EOF

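Note: the first three flags below were removed together with dockershim in Kubernetes 1.24, and --container-runtime is deprecated (in 1.24 its only valid value is remote). If you are deploying 1.24 or later, simply delete these flags from the kubelet startup configuration; the unit template above already omits them.

--network-plugin (deprecated: removed along with dockershim)
--cni-conf-dir, default: /etc/cni/net.d (deprecated: removed along with dockershim)
--image-pull-progress-deadline, duration, default: 1m0s (deprecated: removed along with dockershim)
--container-runtime=remote (deprecated)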
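For a kubelet unit file carried over from an older, dockershim-era deployment, a sketch of deleting these flags with GNU sed. It assumes each flag is written as `--flag=value` on its own continuation line and is not the last argument of ExecStart; otherwise the trailing backslash on the preceding line must be fixed by hand. `strip_removed_flags` is a hypothetical helper:

```shell
# strip_removed_flags UNIT_FILE
# Hypothetical helper (GNU sed -i): deletes ExecStart continuation lines that
# carry the flags listed above. "--container-runtime=" needs the "=" so that
# --container-runtime-endpoint is kept.
strip_removed_flags() {
  sed -i -E '/^[[:space:]]*--(network-plugin|cni-conf-dir|image-pull-progress-deadline|container-runtime)=/d' "$1"
}
```

After editing a live unit file, `systemctl daemon-reload` is still needed before restarting kubelet.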

4.2: Create and distribute the kubelet systemd unit file for each node

[root@k8s-master1 work]# for node_name in ${NODE_NAMES[@]}
do
  echo ">>> ${node_name}"
  sed -e "s/##NODE_NAME##/${node_name}/" kubelet.service.template > kubelet-${node_name}.service
  scp kubelet-${node_name}.service root@${node_name}:/etc/systemd/system/kubelet.service
done

5. Grant kube-apiserver permission to access the kubelet API

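[root@k8s-master1 work]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes-master

6. Bootstrap token authentication and permissions

The tokens created in step 1 authenticate as members of the group system:bootstrappers; binding that group to the system:node-bootstrapper ClusterRole allows kubelets to submit certificate signing requests (CSRs).

[root@k8s-master1 work]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

7. Automatically approve CSRs and generate kubelet client certificates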

[root@k8s-master1 ~]# cd /opt/k8s/work
[root@k8s-master1 work]# cat > csr-crb.yaml <<EOF
# Approve all CSRs for the group "system:bootstrappers"
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
---
# To let a node of the group "system:nodes" renew its own credentials
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-client-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io
---
# A ClusterRole which instructs the CSR approver to approve a node requesting a
# serving cert matching its client cert.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: approve-node-server-renewal-csr
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]
---
# To let a node of the group "system:nodes" renew its own server credentials
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-server-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: approve-node-server-renewal-csr
  apiGroup: rbac.authorization.k8s.io
EOF
[root@k8s-master1 work]# kubectl apply -f csr-crb.yaml

8. Start the kubelet service
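Because the kubelet configuration sets `failSwapOn: true`, kubelet refuses to start while swap is active, which is why the start loop runs `swapoff -a` on every node. `swapoff -a` does not survive a reboot, so swap entries in `/etc/fstab` should be disabled as well. A minimal check to run on a node, assuming the standard `/proc/swaps` layout (one header line, then one line per active swap area); `swap_is_off` is a hypothetical helper:

```shell
# swap_is_off [SWAPS_FILE]
# Succeeds only if no swap area is active. /proc/swaps always starts with a
# header line, so any line after it is an active swap device or file.
swap_is_off() {
  awk 'NR > 1 { active = 1 } END { exit active }' "${1:-/proc/swaps}"
}
```

For example, `swap_is_off || echo "swap still enabled"` on each node before restarting kubelet.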

[root@k8s-master1 work]# for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/"
  ssh root@${node_ip} "/usr/sbin/swapoff -a"
  ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet"
done
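The built-in CSR approver in kube-controller-manager only auto-approves kubelet client certificate requests. Because the kubelet configuration sets `serverTLSBootstrap: true`, each kubelet also submits a serving-certificate CSR (signer `kubernetes.io/kubelet-serving`), and core Kubernetes does not approve those automatically regardless of RBAC rules; they stay Pending until approved, and until then `kubectl logs` and `kubectl exec` against pods on that node typically fail with TLS errors. A sketch that selects the Pending CSRs from `kubectl get csr` output, assuming CONDITION is the last column; `pending_csrs` is a hypothetical helper:

```shell
# pending_csrs [SIGNER]
# Reads `kubectl get csr` output on stdin and prints the names of CSRs whose
# CONDITION (last column) is Pending. If SIGNER is given, only lines that
# contain that signer name are considered.
pending_csrs() {
  awk -v signer="${1:-}" 'NR > 1 && $NF == "Pending" && (signer == "" || index($0, signer) > 0) { print $1 }'
}
```

On the master, review the list first and then approve it, for example `kubectl get csr | pending_csrs kubernetes.io/kubelet-serving | xargs -r kubectl certificate approve`.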

XI. Deploy the kube-proxy component

1. Create the kube-proxy certificate

1.1: Create the certificate signing request

[root@k8s-master1 ~]# cd /opt/k8s/work
[root@k8s-master1 work]# cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "k8s",
      "OU": "dqz"
    }
  ]
}
EOF

1.2: Generate the certificate and private key

[root@k8s-master1 ~]# cd /opt/k8s/work
[root@k8s-master1 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
[root@k8s-master1 work]# ls kube-proxy*
kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem

2. Create and distribute the kubeconfig file

2.1: Create the kubeconfig file

[root@k8s-master1 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig
[root@k8s-master1 work]# kubectl config set-credentials kube-proxy \
  --client-certificate=kube-proxy.pem \
  --client-key=kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig
[root@k8s-master1 work]# kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig
[root@k8s-master1 work]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

2.2: Distribute the kubeconfig file

[root@k8s-master1 work]# for node_name in ${NODE_NAMES[@]}
do
  echo ">>> ${node_name}"
  scp kube-proxy.kubeconfig root@${node_name}:/etc/kubernetes/
done

3. Create the kube-proxy configuration file

3.1: Create the kube-proxy config template

[root@k8s-master1 work]# cat > kube-proxy-config.yaml.template <<EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"
  qps: 100
bindAddress: ##NODE_IP##
healthzBindAddress: ##NODE_IP##:10256
metricsBindAddress: ##NODE_IP##:10249
enableProfiling: true
clusterCIDR: ${CLUSTER_CIDR}
hostnameOverride: ##NODE_NAME##
mode: "ipvs"
portRange: ""
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []
EOF

3.2: Create and distribute the kube-proxy configuration file for every node in the cluster
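The loop in this step pairs `NODE_NAMES[i]` with `NODE_IPS[i]` by index, so both arrays must list the nodes in the same order and have the same length, otherwise a node silently receives another node's IP. A small guard, assuming the two bash arrays are already defined in the shell as in the earlier steps; `check_node_arrays` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# check_node_arrays
# Hypothetical guard: the loops pair NODE_NAMES[i] with NODE_IPS[i], so both
# arrays must be non-empty and of equal length.
check_node_arrays() {
  local names=${#NODE_NAMES[@]} ips=${#NODE_IPS[@]}
  if [ "$names" -eq 0 ] || [ "$names" -ne "$ips" ]; then
    echo "NODE_NAMES has $names entries, NODE_IPS has $ips" >&2
    return 1
  fi
}
```

Running `check_node_arrays` right before the loop makes a mismatch stop the procedure instead of producing a wrong bindAddress.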

[root@k8s-master1 work]# for (( i=0; i < ${#NODE_NAMES[@]}; i++ ))
do
  echo ">>> ${NODE_NAMES[i]}"
  sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-proxy-config.yaml.template > kube-proxy-config-${NODE_NAMES[i]}.yaml.template
  scp kube-proxy-config-${NODE_NAMES[i]}.yaml.template root@${NODE_NAMES[i]}:/etc/kubernetes/kube-proxy-config.yaml
done

4. Create and distribute the kube-proxy systemd unit file

4.1: Create the file

[root@k8s-master1 ~]# cd /opt/k8s/work
[root@k8s-master1 work]# cat > kube-proxy.service <<EOF
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=${K8S_DIR}/kube-proxy
ExecStart=/opt/k8s/bin/kube-proxy \\
  --config=/etc/kubernetes/kube-proxy-config.yaml \\
  --v=4
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

4.2: Distribute the file

[root@k8s-master1 work]# for node_name in ${NODE_NAMES[@]}
do
  echo ">>> ${node_name}"
  scp kube-proxy.service root@${node_name}:/etc/systemd/system/
done

5. Start the kube-proxy service
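`mode: "ipvs"` with `scheduler: rr` depends on the `ip_vs` and `ip_vs_rr` kernel modules; the start loop runs `modprobe ip_vs_rr`, which also loads `ip_vs` as a dependency. modprobe does not persist across reboots; on systemd hosts, listing the modules in a file under `/etc/modules-load.d/` loads them at boot. A sketch that reports which modules are not loaded, assuming the `/proc/modules` format (module name in the first column). Features compiled into the kernel never appear there, so a reported module means "check further", not proof it is absent. `missing_kernel_modules` is a hypothetical helper:

```shell
# missing_kernel_modules MODULES_FILE MODULE...
# Prints each MODULE that has no entry in MODULES_FILE (normally /proc/modules)
# and returns non-zero if at least one is missing.
missing_kernel_modules() {
  local modules_file="$1" m rc=0
  shift
  for m in "$@"; do
    if ! awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "$m"
      rc=1
    fi
  done
  return "$rc"
}
```

On a node: `missing_kernel_modules /proc/modules ip_vs ip_vs_rr`.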

[root@k8s-master1 work]# for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-proxy"
  ssh root@${node_ip} "modprobe ip_vs_rr"
  ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy"
done

6. Check the startup result
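Grepping the Active line works well interactively. For a scripted pass/fail, `systemctl is-active kube-proxy` prints a single word such as `active`, `inactive` or `failed`; the sketch below takes one `<node> <state>` line per node and reports every node that is not active. `report_inactive` is a hypothetical helper:

```shell
# report_inactive
# Reads "<node> <state>" lines on stdin, prints every node whose state is not
# "active", and exits non-zero if there was at least one.
report_inactive() {
  awk '$2 != "active" { print $1; bad = 1 } END { exit bad }'
}

# Usage, reusing the NODE_IPS array from the steps above:
# for node_ip in ${NODE_IPS[@]}; do
#   echo "${node_ip} $(ssh root@${node_ip} systemctl is-active kube-proxy)"
# done | report_inactive
```

An unreachable node produces an empty state, so it is reported as well.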

[root@k8s-master1 work]# for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "systemctl status kube-proxy|grep Active"
done

7. View the IPVS routing rules
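In the sample output for this step, every node balances the VIP `10.254.0.1:443` (in this setup, the address of the `kubernetes` Service) round-robin over the three kube-apiserver instances on port 6443. To check that in a script instead of by eye, a sketch that counts the real servers (`->` lines) listed under one virtual service in `ipvsadm -ln` output; `count_real_servers` is a hypothetical helper, and the expected count of 3 comes from this cluster's three masters:

```shell
# count_real_servers VIP:PORT
# Reads `ipvsadm -ln` output on stdin and prints how many real servers
# ("->" lines) are listed under the given TCP/UDP virtual service.
count_real_servers() {
  awk -v vs="$1" '
    $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vs); next }  # a new virtual service starts
    in_vs && $1 == "->" { n++ }                             # real server of that service
    END { print n + 0 }'
}
```

On a node: `/usr/sbin/ipvsadm -ln | count_real_servers 10.254.0.1:443`, which should print 3 for the output shown in this step.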

[root@k8s-master1 work]# for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "/usr/sbin/ipvsadm -ln"
done

>>> 192.168.66.62
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 192.168.66.62:6443           Masq    1      0          0
  -> 192.168.66.63:6443           Masq    1      0          0
  -> 192.168.66.64:6443           Masq    1      0          0
>>> 192.168.66.63
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 192.168.66.62:6443           Masq    1      0          0
  -> 192.168.66.63:6443           Masq    1      0          0
  -> 192.168.66.64:6443           Masq    1      0          0
>>> 192.168.66.64
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 192.168.66.62:6443           Masq    1      0          0
  -> 192.168.66.63:6443           Masq    1      0          0
  -> 192.168.66.64:6443           Masq    1      0          0
>>> 192.168.66.65
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 192.168.66.62:6443           Masq    1      0          0
  -> 192.168.66.63:6443           Masq    1      0          0
  -> 192.168.66.64:6443           Masq    1      0          0
>>> 192.168.66.66
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 192.168.66.62:6443           Masq    1      0          0
  -> 192.168.66.63:6443           Masq    1      0          0
  -> 192.168.66.64:6443           Masq    1      0          0
>>> 192.168.66.67
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr
  -> 192.168.66.62:6443           Masq    1      0          0
  -> 192.168.66.63:6443           Masq    1      0          0
  -> 192.168.66.64:6443           Masq    1      0          0

XII. Deploy the Calico network

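To deploy the Calico network plugin, follow section 6 of the article on this blog listed below.

XIII. Deploy the cluster add-ons

To deploy the cluster add-ons, follow the article on this blog listed below.

XIV. Check the K8s cluster status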

[root@k8s-master1 work]# kubectl get nodes
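Once Calico and the add-ons are running, every node should report STATUS Ready. A sketch for scripting that check; `not_ready_nodes` is a hypothetical helper and, as written, also flags cordoned nodes whose status reads `Ready,SchedulingDisabled`:

```shell
# not_ready_nodes
# Reads `kubectl get nodes` output on stdin, prints every node whose STATUS
# (second column) is not exactly "Ready", and exits non-zero if there is one.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

Usage on the master: `kubectl get nodes | not_ready_nodes`.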
